Cerebras Inference API

Gateway

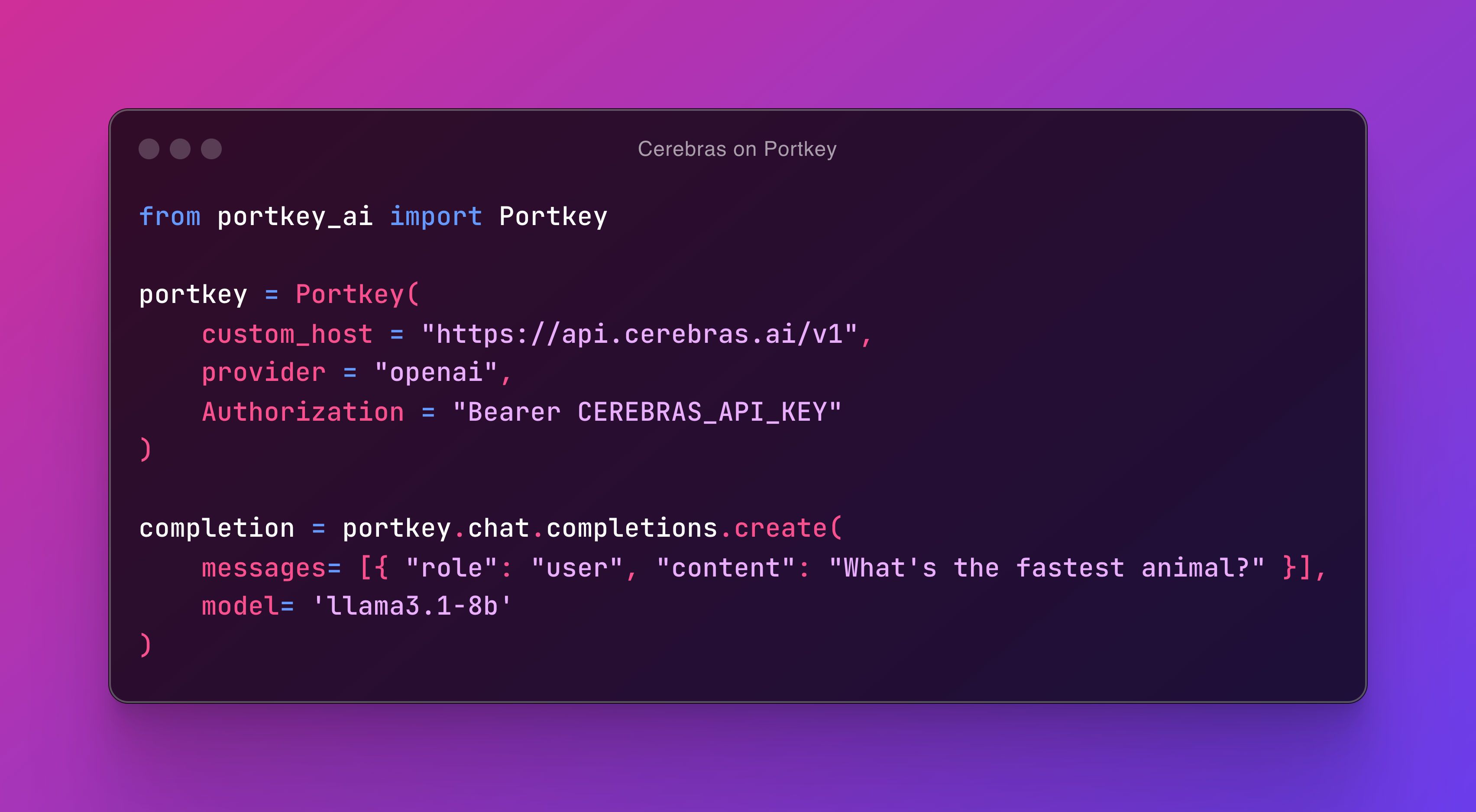

Get incredible inference speed with the Cerebras API: 1800 tokens/sec on the Llama 3.1 8B model. You can route requests to the Cerebras API through Portkey.
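As a rough illustration of what that routing can look like, here is a minimal sketch that sends an OpenAI-style chat completion request through the Portkey gateway using only the Python standard library. Several details are assumptions not stated in this post and should be checked against the docs linked below: the gateway URL `https://api.portkey.ai/v1/chat/completions`, the `x-portkey-api-key` and `x-portkey-provider` header names, the provider slug `cerebras`, and the model id `llama3.1-8b`. The helper names `build_request` and `chat` and the environment variable names are hypothetical.

```python
import json
import os
import urllib.request

# Assumed gateway endpoint (OpenAI-compatible chat completions route).
PORTKEY_URL = "https://api.portkey.ai/v1/chat/completions"


def build_request(prompt, portkey_api_key, cerebras_api_key, model="llama3.1-8b"):
    """Build (but do not send) a chat completion request routed to Cerebras.

    The header names, provider slug, and default model id are assumptions;
    confirm them in the Portkey and Cerebras documentation.
    """
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    headers = {
        "Content-Type": "application/json",
        # Authenticates the caller with the Portkey gateway.
        "x-portkey-api-key": portkey_api_key,
        # Tells the gateway which upstream provider to route to.
        "x-portkey-provider": "cerebras",
        # Upstream provider key, forwarded by the gateway.
        "Authorization": f"Bearer {cerebras_api_key}",
    }
    return urllib.request.Request(
        PORTKEY_URL,
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def chat(prompt):
    """Send the prompt through the gateway and return the reply text."""
    req = build_request(
        prompt,
        os.environ["PORTKEY_API_KEY"],   # hypothetical env var name
        os.environ["CEREBRAS_API_KEY"],  # hypothetical env var name
    )
    with urllib.request.urlopen(req) as resp:
        payload = json.load(resp)
    # Assumes an OpenAI-style response shape.
    return payload["choices"][0]["message"]["content"]
```

With both environment variables set, calling `chat("Say hello in one sentence.")` would return the model's reply as a string. Splitting request construction from sending keeps the headers and body easy to inspect without making a network call.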

Explore docs: https://docs.portkey.ai/docs/integrations/llms/cerebras